As a growing number of businesses and organizations rush to unlock the value of massive amounts of data and derive high-value, actionable business insights through data analysis, they are also running into recurring problems. Here are the most common problems you’re likely to face when performing data analysis:

1. Data Preparation

By many estimates, data scientists spend close to 80% of their time on data cleaning, which improves data quality by making it consistent and accurate before it is used for analysis. The task is extremely mundane and time-consuming: you may need to handle terabytes of data from numerous sources, formats, platforms, and functions every day, while keeping an activity log to avoid duplicating work. Manual data cleaning is therefore a Herculean task, but you can’t skip it, because uncleaned data is often inaccurate and makes the output unreliable. That can have serious negative consequences if the analysis is used for decision-making.

One way to overcome this problem is to automate manual data cleaning and preparation tasks using AI-enabled data science technologies such as AutoML and augmented analytics.
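Even without an AutoML platform, routine cleaning steps can be scripted so they run the same way every time. The sketch below uses plain pandas rather than AutoML or augmented analytics, and the column names (`customer_id`, `amount`) and the sample data are hypothetical, chosen only to show consistent formatting, type coercion, de-duplication, and removal of incomplete rows.

```python
import pandas as pd


def clean_records(df: pd.DataFrame) -> pd.DataFrame:
    """Apply a few routine, repeatable cleaning steps (illustrative only)."""
    out = df.copy()
    # Normalize column names so data from different sources lines up.
    out.columns = [c.strip().lower().replace(" ", "_") for c in out.columns]
    # Make text keys consistent: trim stray whitespace and unify case.
    # ("customer_id" is a hypothetical column name.)
    out["customer_id"] = out["customer_id"].str.strip().str.lower()
    # Coerce amounts to numbers; unparseable entries such as "n/a" become NaN.
    out["amount"] = pd.to_numeric(out["amount"], errors="coerce")
    # Drop exact duplicate rows, which often appear when sources overlap.
    out = out.drop_duplicates()
    # Drop rows missing fields the analysis cannot do without.
    out = out.dropna(subset=["customer_id", "amount"])
    return out.reset_index(drop=True)


# Hypothetical raw data with inconsistent casing, whitespace, and bad values.
raw = pd.DataFrame({
    "Customer ID": ["A1", "a1 ", "B2", None],
    "Amount": ["10.5", "10.5", "n/a", "7"],
})
clean = clean_records(raw)
print(clean)
```

Here the two "A1" rows collapse into one after normalization, the "n/a" amount and the missing ID are dropped, and a single clean row remains. Wrapping the steps in one function is what makes them repeatable across daily loads.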

2. Feature Selection

In the modern world, we’re surrounded by massive piles of large-scale, high-dimensional data. Because of the so-called curse of dimensionality, it’s important to reduce the dimensionality of such data. Feature selection helps by reducing the number of predictor variables, letting you build a simpler, more comprehensible model. A model with fewer variables is easier to understand, train, and run, and is less prone to data leakage. Feature selection can therefore help produce clean, comprehensible data and improve learning performance.
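As a small illustration of reducing the number of predictor variables, the sketch below applies a simple unsupervised filter with pandas: it drops constant columns and one column from each highly correlated pair. This is one common filter approach, not the only one, and the thresholds (`var_tol`, `corr_threshold`) and the toy columns are assumptions chosen for the example.

```python
import numpy as np
import pandas as pd


def drop_redundant_features(X: pd.DataFrame, var_tol: float = 1e-8,
                            corr_threshold: float = 0.95) -> pd.DataFrame:
    """Illustrative unsupervised filter: drop constant and highly correlated columns."""
    # Step 1: drop (near-)constant columns; they cannot help distinguish rows.
    X = X.loc[:, X.var() > var_tol]
    # Step 2: look only at the upper triangle of the absolute correlation
    # matrix so each pair of columns is considered once.
    corr = X.corr().abs()
    upper = corr.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))
    # Drop the later column of any pair whose correlation exceeds the threshold.
    to_drop = [col for col in upper.columns if (upper[col] > corr_threshold).any()]
    return X.drop(columns=to_drop)


rng = np.random.default_rng(0)
a = rng.normal(size=200)
X = pd.DataFrame({
    "a": a,
    "a_scaled": 2 * a + 1,          # redundant: a linear function of "a"
    "b": rng.normal(size=200),      # independent noise feature
    "const": np.ones(200),          # carries no information
})
X_reduced = drop_redundant_features(X)
print(list(X_reduced.columns))  # → ['a', 'b']
```

A filter like this ignores the target variable entirely, so it is cheap but can keep features that are irrelevant to the prediction; supervised methods address that.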

When you handle a small number of variables, a largely manual process works without much trouble. But when time is limited and there are many variables to deal with, manual feature selection becomes a pain. With big data, data velocity and data variety can also be big problems for feature selection.

Such problems can be addressed with semi-automated or automated feature selection. For instance, to speed things up, you can use scikit-feature, an open-source feature selection repository that contains most of the popular feature selection algorithms in current use.

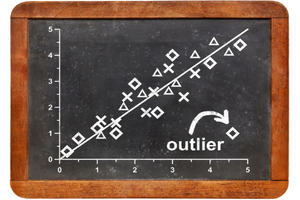
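To show what automated, supervised feature selection looks like in code, the sketch below uses scikit-learn's `SelectKBest` with the ANOVA F-test (`f_classif`) as a stand-in; it does not reproduce scikit-feature's own API. The synthetic data is an assumption: ten noise columns, two of which (columns 3 and 7) are shifted according to a binary target.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_classif

rng = np.random.default_rng(42)
n_samples, n_features = 300, 10
y = rng.integers(0, 2, size=n_samples)           # binary target
X = rng.normal(size=(n_samples, n_features))     # pure-noise features
X[:, 3] += 2.0 * y                               # column 3 depends on y
X[:, 7] += 1.5 * y                               # column 7 depends on y

# Score every feature with a univariate ANOVA F-test and keep the top k.
selector = SelectKBest(score_func=f_classif, k=2)
X_selected = selector.fit_transform(X, y)

print(selector.get_support(indices=True))  # → [3 7]
print(X_selected.shape)                    # → (300, 2)
```

Univariate scoring is fast and scales to many columns, but it judges each feature in isolation, so it can miss features that only matter in combination.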

3. Outliers

Outliers are data points that lie at an abnormal distance from other data points. Put differently, they’re values that differ markedly from the other values in a random sample. In data analysis, outliers can be problematic because they can distort real results or cause tests to miss significant findings. In a distribution with extreme outliers, the distribution can be skewed in the direction of the outliers, which makes the data hard to analyze. However, outliers aren’t always a bad thing.
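One widely used way to make "abnormal distance" concrete is Tukey's rule: flag values more than 1.5 interquartile ranges (IQR) below the first quartile or above the third. The sketch below assumes that convention (the multiplier `k=1.5` is a common default, not a universal one) and uses a made-up sample to show how a single extreme value pulls the mean while the median stays put.

```python
import numpy as np


def iqr_outlier_mask(values, k: float = 1.5) -> np.ndarray:
    """Flag values outside Tukey's fences [Q1 - k*IQR, Q3 + k*IQR]."""
    x = np.asarray(values, dtype=float)
    q1, q3 = np.percentile(x, [25, 75])
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return (x < lower) | (x > upper)


data = np.array([12, 13, 12, 14, 15, 13, 14, 95], dtype=float)
mask = iqr_outlier_mask(data)
print(data[mask])                          # → [95.]
# The single outlier drags the mean far from the bulk of the data,
# while the median barely moves.
print(np.mean(data), np.median(data))      # → 23.5 13.5
```

The function only flags values; whether to drop, correct, or keep them is a separate decision about the data and the model.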

If data values are obviously incorrect or impossible, you should definitely remove them. But sometimes when the data doesn’t fit your model, it could be your model that needs to be changed, not the data.

Sometimes you’ll come across data sets for which finding an appropriate model is difficult. But that doesn’t justify discarding data just because you can’t find a familiar model to fit it. One solution is to simplify the analysis by using a nonparametric test.
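The sketch below contrasts a parametric test with a nonparametric one on the same data, using SciPy. The Mann-Whitney U test is just one example of a nonparametric alternative to the two-sample t-test, and the two groups are hypothetical values chosen so that one extreme point sits in the second group.

```python
from scipy import stats

# Two small, hypothetical groups; group_b contains one extreme value.
group_a = [1, 2, 3, 4, 5, 6, 7, 8]
group_b = [9, 10, 11, 12, 13, 14, 15, 200]

# Parametric: Student's t-test assumes roughly normal data; the outlier
# inflates group_b's variance and weakens the test.
t_res = stats.ttest_ind(group_a, group_b)

# Nonparametric: Mann-Whitney U compares ranks, so the outlier counts only
# as "the largest value", not as a value far beyond the rest.
u_res = stats.mannwhitneyu(group_a, group_b, alternative="two-sided")

print(f"t-test p = {t_res.pvalue:.3f}")
print(f"Mann-Whitney U p = {u_res.pvalue:.5f}")
```

With these numbers the t-test is not significant at the 0.05 level even though every value in `group_b` exceeds every value in `group_a`, while the rank-based test detects the difference without any data being discarded.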

Closing thoughts

If you want to learn more about these problems and how to solve them, enrolling in a data analysis course offered by a leading data science training institute would be a good idea.