Adversarial systems and game theory are becoming pivotal areas of research in the fast-moving field of artificial intelligence (AI). From strategic games like chess and Go to real-world applications such as autonomous vehicles, cybersecurity, and financial markets, AI increasingly operates in competitive environments, which makes it important to understand and refine how these systems interact. This article explores key concepts of game theory in AI: how systems strategize, how they compete, and how they can be optimized for better performance. The approach mirrors how analysts review sporting events such as UFC Fight Night 25, where evaluating strategy, timing, and adaptability offers insight into both human and machine decision-making.

The Foundations of Game Theory in AI

What is Game Theory?

Game theory is a mathematical framework for modeling strategic interactions among agents that are assumed to be rational, meaning that each acts to maximize its own utility. It provides tools for analyzing situations where the outcome depends on the actions of multiple decision-makers, each pursuing its own objectives. In AI, game theory is used to model and predict the behavior of intelligent agents in competitive environments.

Key Concepts in Game Theory

- Players: The decision-makers in the game. In AI, these are usually autonomous agents or algorithms.

- Strategies: The set of possible actions that each player can take.

- Payoffs: The rewards or penalties associated with the game's outcomes.

- Nash Equilibrium: A state in which no player can gain by changing their own strategy while the other players' strategies stay fixed.

- Zero-Sum Games: Games in which one player's gains equal the other players' losses. Many adversarial AI scenarios, e.g. chess or poker, are zero-sum.
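The Nash equilibrium condition above can be checked mechanically for small games. The following is a minimal sketch, assuming a two-player game given as two payoff matrices; the function name `pure_nash_equilibria` and the example payoff values are illustrative choices, not taken from the text. It only finds pure-strategy equilibria.

```python
from itertools import product

def pure_nash_equilibria(payoff_a, payoff_b):
    """Return all (row, col) pairs where neither player gains by deviating alone."""
    n_rows, n_cols = len(payoff_a), len(payoff_a[0])
    equilibria = []
    for r, c in product(range(n_rows), range(n_cols)):
        # Row player cannot improve by switching rows while the column stays fixed.
        row_ok = all(payoff_a[r][c] >= payoff_a[alt][c] for alt in range(n_rows))
        # Column player cannot improve by switching columns while the row stays fixed.
        col_ok = all(payoff_b[r][c] >= payoff_b[r][alt] for alt in range(n_cols))
        if row_ok and col_ok:
            equilibria.append((r, c))
    return equilibria

# Prisoner's dilemma (illustrative payoffs): action 0 = cooperate, 1 = defect.
pd_a = [[-1, -3], [0, -2]]
pd_b = [[-1, 0], [-3, -2]]
print(pure_nash_equilibria(pd_a, pd_b))  # [(1, 1)]

# Matching pennies is zero-sum: payoff_b is the negation of payoff_a.
mp_a = [[1, -1], [-1, 1]]
mp_b = [[-1, 1], [1, -1]]
print(pure_nash_equilibria(mp_a, mp_b))  # []
```

Matching pennies has no pure-strategy equilibrium, which is why zero-sum games often call for mixed (randomized) strategies.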

Game Theory in AI

When AI systems are deployed in environments where they must compete or collaborate with other agents, they need principled tools for making decisions. These interactions can be expressed in a formal game-theoretic framework, and algorithms can be designed to exploit that structure. For example, in multi-agent reinforcement learning (MARL), agents learn to optimize their strategies in response to the actions of other agents, which produces complex dynamics that can be analyzed using game theory.

AI Strategies in Competitive Environments

Minimax Algorithm

The minimax algorithm is one of the fundamental strategies in adversarial AI. It minimizes the worst-case loss in a two-player zero-sum game. In a nutshell, minimax recursively explores the game tree and selects the move with the best guaranteed outcome, assuming the opponent also plays optimally.

Example: Chess

In chess, minimax evaluates each candidate move while accounting for the opponent's best response. Because the full game tree is far too large to search, the tree is explored to a fixed depth, the resulting positions are scored with a heuristic evaluation function, and the move with the highest backed-up value is chosen.

Alpha-Beta Pruning

Although minimax works, it becomes computationally expensive in games with large branching factors. Alpha-beta pruning is an optimization that avoids evaluating parts of the game tree. It cuts off branches that can never influence the final decision, so the search can go deeper into the game tree in the same amount of time.

Example: Go

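The branching factor of Go is much greater than that of chess, so exhaustive search is impractical, and alpha-beta pruning with heuristic evaluation functions alone has not been enough for strong play. AlphaGo instead combined Monte Carlo Tree Search with deep neural networks that evaluate positions and suggest moves, which allowed it to analyze positions efficiently and make more effective strategic decisions.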

Monte Carlo Tree Search (MCTS)

Monte Carlo Tree Search is a probabilistic search algorithm for games with large state spaces, such as Go and poker. MCTS randomly samples possible game trajectories and uses the results to steer the search toward more promising moves. Over many iterations, the algorithm builds a tree of possible moves that is concentrated on the moves that led to good outcomes in the simulations.
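The sampling loop described above can be sketched with the common UCT variant of MCTS. Everything specific here is an assumption made for illustration: the toy game (a Nim variant where players alternately remove 1 to 3 stones and whoever takes the last stone wins), the exploration constant 1.4, and the names `Node`, `uct_child`, `rollout`, and `mcts`.

```python
import math
import random

class Node:
    def __init__(self, stones, parent=None, move=None):
        self.stones = stones    # stones left when this node's player is to move
        self.parent = parent
        self.move = move        # move that led from the parent to this node
        self.children = []
        self.untried = [m for m in (1, 2, 3) if m <= stones]
        self.visits = 0
        self.wins = 0.0         # wins for the player who moved INTO this node

def uct_child(node, c=1.4):
    """Pick the child that best balances average win rate and exploration."""
    return max(
        node.children,
        key=lambda ch: ch.wins / ch.visits
        + c * math.sqrt(math.log(node.visits) / ch.visits),
    )

def rollout(stones, rng):
    """Play uniformly random moves; True if the player to move at the start wins."""
    turn = 0  # 0 = the player to move at the start of the rollout
    while stones > 0:
        stones -= rng.randint(1, min(3, stones))
        if stones == 0:
            return turn == 0
        turn ^= 1
    return False  # no stones left: the previous player already took the last one

def mcts(stones, iterations=2000, seed=0):
    rng = random.Random(seed)
    root = Node(stones)
    for _ in range(iterations):
        node = root
        # 1. Selection: descend while the node is fully expanded and not terminal.
        while not node.untried and node.children:
            node = uct_child(node)
        # 2. Expansion: add one untried move as a new child.
        if node.untried:
            move = node.untried.pop(rng.randrange(len(node.untried)))
            child = Node(node.stones - move, parent=node, move=move)
            node.children.append(child)
            node = child
        # 3. Simulation: random playout from the new node.
        mover_wins = rollout(node.stones, rng)
        # 4. Backpropagation: flip the result at each level up the tree.
        result = 0.0 if mover_wins else 1.0
        while node is not None:
            node.visits += 1
            node.wins += result
            result = 1.0 - result
            node = node.parent
    # Recommend the most-visited move at the root.
    return max(root.children, key=lambda ch: ch.visits).move

print(mcts(5))
```

In this toy game, optimal play leaves the opponent a multiple of 4 stones, so from 5 stones the search should concentrate its visits on removing 1. The exact result depends on the random seed and the number of iterations.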

Example: Poker

MCTS can also be adapted to games with hidden information, such as other players' cards. The algorithm simulates thousands of different ways the game might play out, estimates the value of each available action from those simulations, and picks the one with the best expected payoff.

Reinforcement Learning in Adversarial Settings

Reinforcement learning (RL) is a powerful paradigm for training AI agents to make decisions in dynamic environments. In adversarial settings, RL agents learn by interacting with the environment, which includes their opponents, and receiving feedback as rewards or penalties. The goal is to learn a policy that maximizes the cumulative reward over time.
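One common way to make "cumulative reward" concrete is the discounted return, where later rewards are weighted by a discount factor. The discount factor `gamma` and the sample reward sequence below are assumptions for illustration; the text does not specify a particular formulation.

```python
def discounted_return(rewards, gamma=0.99):
    """Sum of rewards where the reward k steps ahead is weighted by gamma**k."""
    g = 0.0
    # Work backwards so each step applies G_t = r_t + gamma * G_{t+1}.
    for r in reversed(rewards):
        g = r + gamma * g
    return g

# A small reward now and a large reward three steps later.
print(discounted_return([1, 0, 0, 10], gamma=0.5))  # 2.25
```

With `gamma=0.5` the delayed reward of 10 contributes only 10 * 0.5**3 = 1.25, so a smaller `gamma` makes the agent more short-sighted.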

Example: Dota 2

OpenAI's Dota 2 bots are a well-known example of RL in adversarial settings. The bots were trained with reinforcement learning through self-play, playing millions of games against themselves and developing strategies that outplayed human players. They also learned to work as a team, make split-second decisions, and adapt their strategies to opponents.

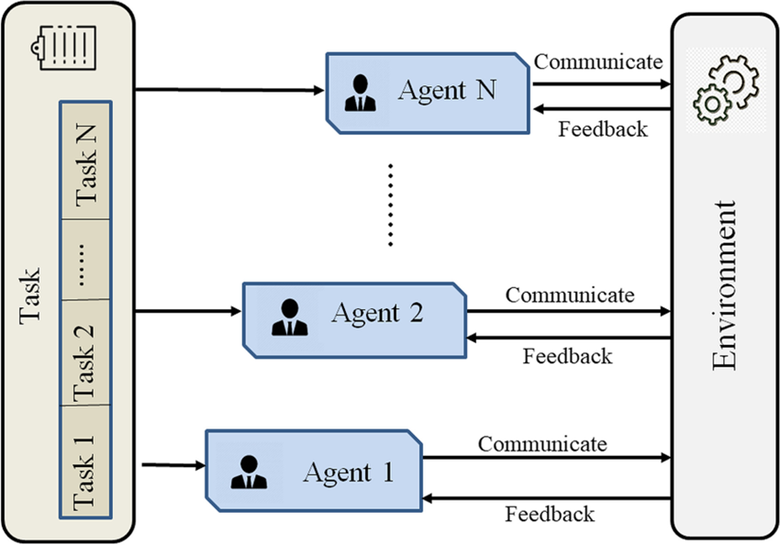

Multi-Agent Reinforcement Learning (MARL)

When there are multiple agents in the environment, their interactions become considerably more complex. In MARL, the agents learn and act simultaneously. This creates a dynamic, non-stationary environment in which the optimal strategy for one agent depends on the strategies of the other agents.

Example: Autonomous Vehicles

For autonomous vehicles, MARL can be used to model how self-driving cars interact with one another on the road. Each car must learn to navigate the environment without collisions while negotiating its route with other vehicles. With MARL algorithms, these agents can learn cooperative behaviors such as merging into traffic or crossing an intersection.

Challenges in Optimizing Adversarial AI Systems

Scalability

Scalability is one of the biggest challenges for adversarial AI. The more agents there are and the more complex the environment is, the more computational resources are required to model and optimize strategies. Techniques such as parallel computing, distributed learning, and efficient search algorithms are essential for scaling adversarial AI.

Non-Stationarity

In multi-agent settings, the environment is non-stationary because the strategies of the agents evolve over time. It is therefore difficult for agents to learn stable policies, since the optimal strategy can change as other agents adapt. This challenge is being addressed through techniques such as opponent modeling and meta-learning.

Hidden Information

Many adversarial environments involve hidden information, where agents cannot observe the full state of the environment. This introduces uncertainty, because an agent must make decisions based on incomplete information. Techniques such as Bayesian reasoning and information-theoretic approaches are used to model and reason about hidden information.
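As a small illustration of Bayesian reasoning about hidden information, the sketch below updates a belief about an opponent's unseen hand after observing a bet. The hand categories, prior, and likelihood values are made-up numbers for illustration only.

```python
def bayes_update(prior, likelihood):
    """Posterior over hidden states given P(state) and P(observation | state)."""
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(unnormalized.values())
    return {h: p / total for h, p in unnormalized.items()}

# Hypothetical belief about the opponent's hidden hand before any action.
prior = {"strong": 0.3, "weak": 0.7}
# Hypothetical chance that the opponent bets with each kind of hand.
p_bet = {"strong": 0.9, "weak": 0.2}

posterior = bayes_update(prior, p_bet)
print(round(posterior["strong"], 3))  # 0.659
```

Observing the bet raises the estimated probability of a strong hand from 0.3 to about 0.66, and the agent can then choose its action against this updated belief.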

Exploration vs. Exploitation

In reinforcement learning, an agent must strike a balance between exploration (trying new strategies to discover their effects) and exploitation (using known strategies to maximize reward). This balance is especially hard in adversarial settings, because exploring can expose vulnerabilities that an opponent can exploit. Techniques such as epsilon-greedy strategies, Thompson sampling, and intrinsic motivation are used to manage this trade-off.
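The epsilon-greedy strategy mentioned above can be sketched on a simple multi-armed bandit. The bandit setup (Gaussian rewards with unit variance), the arm means, and the function names are assumptions for illustration, not a setup described in the text.

```python
import random

def epsilon_greedy(q_values, epsilon, rng):
    """With probability epsilon explore a random action, otherwise exploit the best."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=q_values.__getitem__)

def run_bandit(true_means, steps=5000, epsilon=0.1, seed=0):
    """Estimate each arm's value from noisy rewards using epsilon-greedy selection."""
    rng = random.Random(seed)
    q = [0.0] * len(true_means)      # running estimate of each arm's mean reward
    counts = [0] * len(true_means)   # how often each arm was pulled
    for _ in range(steps):
        a = epsilon_greedy(q, epsilon, rng)
        reward = rng.gauss(true_means[a], 1.0)
        counts[a] += 1
        q[a] += (reward - q[a]) / counts[a]  # incremental average
    return q, counts

estimates, pulls = run_bandit([0.2, 0.5, 0.8])
print(estimates, pulls)
```

A larger `epsilon` explores more and finds the best arm faster, but keeps spending pulls on worse arms afterwards. In practice `epsilon` is often decayed over time.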

Ethical Considerations

Ethical considerations become more important as AI systems grow more capable in adversarial settings. For example, an AI system used for cyber defense in a military context must not produce unintended consequences such as escalation of conflict or collateral damage. Ensuring that adversarial AI systems are aligned with human values and ethical principles is a crucial problem.

Optimizing Adversarial AI Systems

Transfer Learning

Transfer learning applies knowledge acquired in one domain to a different but related domain. In adversarial AI, it can speed up learning by reusing strategies learned in one environment or game to improve performance in another. For example, an AI system trained to play chess may be able to transfer some of its strategic knowledge to a related game such as shogi.

Meta-Learning

Meta-learning, or learning to learn, trains an AI system so that it can adapt quickly to new tasks or environments. In adversarial settings, meta-learning is useful for building agents that can rapidly adjust their strategies to new opponents or conditions. It is particularly useful when the dynamics are constantly changing.

Opponent Modeling

Opponent modeling means predicting the strategies and intentions of other agents in the environment. By understanding how opponents behave, an AI system can adjust its own strategy accordingly. Techniques such as inverse reinforcement learning and Bayesian inference are used to model opponents' strategies.
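A very simple form of opponent modeling is to estimate the opponent's action frequencies and best-respond to them. The sketch below does this for rock-paper-scissors; it is a deliberately simpler stand-in for the inverse reinforcement learning and Bayesian methods named above, and the smoothing scheme and observed history are illustrative assumptions.

```python
from collections import Counter

ACTIONS = ("rock", "paper", "scissors")
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}  # key beats value

def payoff(mine, theirs):
    """+1 for a win, 0 for a draw, -1 for a loss."""
    if mine == theirs:
        return 0
    return 1 if BEATS[mine] == theirs else -1

def predict(history):
    """Laplace-smoothed empirical distribution over the opponent's next action."""
    counts = Counter(history)
    total = len(history) + len(ACTIONS)
    return {a: (counts[a] + 1) / total for a in ACTIONS}

def best_response(history):
    """Action with the highest expected payoff against the predicted distribution."""
    belief = predict(history)

    def expected(mine):
        return sum(p * payoff(mine, theirs) for theirs, p in belief.items())

    return max(ACTIONS, key=expected)

observed = ["rock", "rock", "paper", "rock", "scissors", "rock"]
print(best_response(observed))  # paper
```

Because the observed opponent favors rock, the model responds with paper. An adaptive opponent could in turn exploit such a predictable response, which is one reason opponent modeling is hard in practice.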

Robust Optimization

In adversarial environments, it is important to develop strategies that are robust to uncertainty and variability. The goal of robust optimization is to find strategies that perform reasonably well across a wide variety of possible scenarios, rather than seeking an optimal solution for a restricted subset of conditions. This is especially important in real-world applications, where the environment may be uncertain.
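One simple way to express this idea is the maximin rule: pick the strategy whose worst-case payoff is best, and compare it with the strategy that is best on average. The payoff table below is invented for illustration, and maximin is only one of several possible robustness criteria.

```python
def maximin_choice(payoffs):
    """Index of the strategy whose worst-case payoff across scenarios is highest."""
    return max(range(len(payoffs)), key=lambda i: min(payoffs[i]))

def average_choice(payoffs):
    """Index of the strategy with the highest mean payoff across scenarios."""
    return max(range(len(payoffs)), key=lambda i: sum(payoffs[i]) / len(payoffs[i]))

# Rows are strategies, columns are scenarios (hypothetical values).
payoffs = [
    [10, 9, -8],  # strategy 0: strong in most scenarios, fails badly in one
    [4, 3, 2],    # strategy 1: modest but never bad
    [6, 1, 0],    # strategy 2
]

print(average_choice(payoffs))  # 0
print(maximin_choice(payoffs))  # 1
```

Strategy 0 wins on average but can lose 8 in the worst scenario, while strategy 1 never does worse than 2. In an adversarial setting where an opponent may steer toward the worst scenario, the maximin choice is the safer one.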

Human-AI Collaboration

In many adversarial tasks, humans and AI systems are most effective when they work together. One example is cybersecurity, where human experts supply domain knowledge and intuition that complement the analytical capability of AI. Designing systems that support effective human-AI collaboration is an important area of research.

Future Directions in Adversarial AI

Generalization Across Domains

Generalization across domains is considered one of the great challenges in adversarial AI. Current AI systems tend to excel at specific games or environments and perform poorly in others. Research in transfer learning, meta-learning, and domain adaptation addresses this challenge by helping AI systems generalize what they have learned.

Explainability and Transparency

As AI systems become more complex, it becomes harder to understand how they reach their decisions. In high-stakes applications such as cybersecurity and autonomous vehicles, explainability and transparency are especially important for building trust in adversarial AI systems. Interpretable machine learning and model-agnostic explanations are being explored as ways to understand AI systems.

Ethical AI in Adversarial Settings

Aligning adversarial AI systems with ethical principles is an important problem. It involves designing systems that avoid harmful behaviors, protect privacy, and are fair. Research in AI ethics and value alignment will help build adversarial AI that benefits society as a whole.

Real-World Applications

Adversarial AI and game theory have many applications beyond games. In cybersecurity, AI systems can detect and respond to threats in real time. In finance, AI can optimize trading strategies in competitive markets. In healthcare, AI can help design personalized treatment plans when patient responses are uncertain. As these applications grow, optimizing adversarial AI systems becomes increasingly important.

Conclusion

Optimizing adversarial AI systems is a complex, multifaceted challenge that draws on game theory, reinforcement learning, and multi-agent interactions. With techniques such as the minimax algorithm, Monte Carlo Tree Search, and multi-agent reinforcement learning, AI systems are competing in increasingly complex environments. The potential of adversarial AI is nevertheless limited by major challenges such as scalability, non-stationarity, and ethical concerns.

As research in this field progresses, we can expect AI systems in competitive settings to become not only more capable but also more adaptable, transparent, and aligned with human values. Adversarial AI promises to find applications in everything from entertainment to critical real-world domains, which will ultimately improve our ability to tackle problems and make decisions in an increasingly interconnected world.
