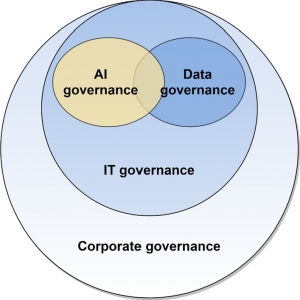

Machine learning and, more broadly, artificial intelligence (AI) have become part of the DNA of most industries and are changing the way we make decisions. However, the development of AI systems and their use across the public domain raise a range of ethical issues and potential risks that need to be addressed [1]. AI governance frameworks play a crucial role in helping organizations build and operate AI applications that conform to applicable standards and regulations. In this piece, we discuss practical approaches and frameworks for scaling ethical AI governance in the business sector and show how they can be applied.

(https://www.researchgate.net/figure/Artificial-intelligence-AI-governance-as-part-of-an-organizations-governance-structure_fig1_358846116)

Understanding AI Governance

AI governance is the set of rules and processes that enable organizations to develop and apply AI technologies in line with best practices, legal requirements, and ethical standards. Its aim is to promote transparency [2] and fairness in AI systems while avoiding harms such as bias, discrimination, and privacy breaches.

(https://www.ovaledge.com/blog/what-is-ai-governance)

A robust AI governance framework typically addresses the following key aspects:

- Accountability: Defining clear responsibilities and mandates for AI governance.

- Transparency: Ensuring that stakeholders can understand how AI systems make decisions.

- Fairness: Avoiding the discriminatory effects that can arise from biased AI models.

- Privacy and Security: Protecting user data and securing AI systems.

- Compliance: Adhering to applicable laws, regulations, and ethical guidelines.

Challenges in Implementing AI Governance

AI governance may sound straightforward in principle, yet putting it into practice is difficult. Organizations face several hurdles, including:

- Lack of Standardization: There is currently no standard approach to AI governance, so practices vary widely from one organization to another.

- Complexity of AI Systems: AI models are complex, and much of their decision-making is partially or entirely hidden from view, which makes it hard to account for what the AI does [3].

- Rapid Technological Evolution: AI continues to develop rapidly, and organizations are under constant pressure to keep governance frameworks current and relevant.

- Resource Constraints: Because AI is still a new frontier, small organizations may lack the resources to implement sound AI governance measures.

The Future of Ethical AI Regulation

Create an AI Governance Framework

The first step in ethical AI governance is to establish a broad framework that sets out how the organization manages the risks posed by AI and upholds ethical standards. This framework should include:

- AI Ethics Principles: Establish core values that everyone can agree on, such as fairness, transparency, accountability, and respect for privacy.

- Governance Structure: Define the roles and responsibilities of the key actors in AI system development and use, such as developers, data analysts [4], lawyers, and managers.

- Policies and Procedures: Create guidelines for AI development, deployment, and oversight that comply with the established ethical standards.

Foster an Ethical AI Culture

Ethical AI governance must be grounded in a change of culture within the organization. Leaders should promote a culture that prioritizes ethical considerations in AI projects by:

- Training and Awareness: Setting up a training program so that all employees involved in AI projects receive ethics and governance training.

- Ethics Champions: Selecting and training individuals within teams to act as advocates for ethical decision-making.

- Open Dialogue: Encouraging employees to voice concerns and raise possible issues with the organization’s AI systems.

Conduct Regular Ethical Assessments

Organizations should conduct regular assessments to evaluate the ethical implications of their AI systems. These assessments should cover:

- Bias Detection: Identifying and mitigating biases in AI models.

- Privacy Impact: Assessing the impact of AI systems on user privacy.

- Risk Analysis: Evaluating the potential risks and unintended consequences of AI applications.

Ensure Transparency and Explainability

- Model Documentation: Documenting the data sources and development process behind each AI model.

- Explainable AI Tools: Incorporating tools that explain model behavior to people who do not have a background in computer science, so that AI models can be used in more scenarios.

- User Communication: Being transparent about how AI systems operate and make decisions, and giving end users an understanding of what is being done.
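Model documentation is easier to keep up to date when it lives in a structured record next to the model. The sketch below is one possible shape for such a record, not a standard schema: the `ModelRecord` class and all of its field names are illustrative assumptions.

```python
import json
from dataclasses import dataclass, field, asdict
from typing import List


@dataclass
class ModelRecord:
    """Hypothetical documentation record for one AI model (field names are assumptions)."""
    name: str
    version: str
    intended_use: str
    data_sources: List[str]
    training_summary: str
    known_limitations: List[str] = field(default_factory=list)

    def to_json(self) -> str:
        # Serialize the record so it can be stored and reviewed alongside the model.
        return json.dumps(asdict(self), indent=2)


record = ModelRecord(
    name="loan-approval",
    version="1.2.0",
    intended_use="Rank loan applications for manual review",
    data_sources=["applications_2020_2023.csv"],
    training_summary="Gradient-boosted trees, 5-fold cross-validation",
    known_limitations=["Not evaluated on applicants under 21"],
)
print(record.to_json())
```

A record like this can be reviewed during audits and shared, in simplified form, with end users.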

Initiate Bias Elimination Practices

Bias in AI leads to unfair and discriminatory outcomes. Bias identification and prevention should be applied throughout the AI life cycle to promote the fairness of AI systems.

- Diverse Data: Training data is one of the main sources of bias in AI systems. A model’s performance may be skewed if its training data does not represent all population groups equally. To reduce this risk, an organization’s training data should cover different demographics, geographical locations, and socio-economic groups. Frequent, systematic checks on data and data collection practices are necessary to detect and correct gaps in representation.

- Fairness Audits: Frequent fairness audits are key to keeping bias out of AI models [5]. These audits evaluate AI systems by comparing outcomes across demographic groups. Organizations can measure fairness with metrics such as disparate impact, equal opportunity, and demographic parity.

- Algorithmic Fairness Tools: Several tools can help organizations detect and reduce bias in their AI systems. These include IBM’s AI Fairness 360, Google’s What-If Tool, and Microsoft’s Fairlearn, which show how AI models behave across different groups. They can reveal where data is skewed and suggest mitigation techniques, such as re-weighting data or adding fairness constraints to a model. Organizations can use these tools to address bias before AI systems are deployed in the field [6].
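To make the fairness metrics named above more concrete, here is a minimal sketch in plain Python for binary predictions. It is not the API of AI Fairness 360, the What-If Tool, or Fairlearn; the function names and the toy data are assumptions made for illustration, and the definitions used are the common ones (selection rate per group for demographic parity and disparate impact, true positive rate per group for equal opportunity).

```python
from collections import defaultdict


def selection_rates(y_pred, groups):
    """Share of positive predictions (1) within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def true_positive_rates(y_true, y_pred, groups):
    """Share of actual positives that were predicted positive, per group."""
    actual, hits = defaultdict(int), defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            actual[group] += 1
            hits[group] += pred
    return {g: hits[g] / actual[g] for g in actual}


def fairness_report(y_true, y_pred, groups):
    rates = selection_rates(y_pred, groups)
    tprs = true_positive_rates(y_true, y_pred, groups)
    highest = max(rates.values())
    return {
        # Gap between the most and least frequently selected groups.
        "demographic_parity_difference": highest - min(rates.values()),
        # Ratio of lowest to highest selection rate; 1.0 if no group is selected at all.
        "disparate_impact_ratio": min(rates.values()) / highest if highest > 0 else 1.0,
        # Gap in true positive rates between groups.
        "equal_opportunity_difference": max(tprs.values()) - min(tprs.values()),
    }


# Toy audit data (hypothetical): two demographic groups, "a" and "b".
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
report = fairness_report(y_true, y_pred, groups)
print(report)
```

In this toy data, group "a" is selected three times as often as group "b". A disparate impact ratio well below 1 is the kind of signal that would prompt a closer review; the threshold an organization uses is a policy decision.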

Strengthen Privacy and Security Measures

Privacy and security are core components of ethical AI governance. By addressing them, organizations can earn user trust and meet legal requirements.

- Data Anonymization: Data anonymization workflows are the starting point for protecting user privacy. Anonymization involves disguising or removing any information that would allow a person to be singled out when a data set is cross-referenced. Common techniques include generalization, suppression, and pseudonymization, which allow data to be used without losing the information the AI model needs. Organizations should also periodically review and update their anonymization procedures to address new privacy risks.

- Secure AI Systems: Strong protection measures should be applied to guard AI systems against unauthorized access and data leakage. These include two-factor authentication, secure coding practices, and access controls, among others. Organizations should also carry out frequent security audits to identify vulnerabilities in their AI technology [4]. These vulnerabilities must be recognized and addressed to defend AI systems against cyber attackers and to maintain the reliability of models and their data.

- Compliance Checks: To keep AI systems compliant with data protection laws such as GDPR and CCPA, organizations must run compliance checks frequently. This involves identifying the legal requirements that apply to AI practice and analyzing gaps in current practices. Compliance checks should cover the collection, storage, processing, and sharing of data to confirm whether the organization is respecting privacy laws.
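The three anonymization techniques mentioned above can be illustrated on a single record. This is a minimal sketch under stated assumptions: the record fields (`name`, `email`, `age`, `purchase_total`), the helper names, and the placeholder key are all hypothetical, and a real pipeline would load the key from a secrets manager and assess re-identification risk separately.

```python
import hashlib
import hmac

# Hypothetical placeholder; in practice the key would come from a secrets manager.
SECRET_KEY = b"replace-with-a-managed-secret"


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Pseudonymization: replace an identifier with a keyed hash (HMAC-SHA256).

    The same input always maps to the same token, so records can still be linked.
    """
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def generalize_age(age: int, width: int = 10) -> str:
    """Generalization: report an age band instead of the exact age."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def anonymize_record(record: dict) -> dict:
    return {
        "user_token": pseudonymize(record["email"]),  # pseudonymized identifier
        "age_band": generalize_age(record["age"]),    # generalized attribute
        "purchase_total": record["purchase_total"],   # kept for the model
        # Suppression: "name" and the raw "email" are deliberately left out.
    }


raw = {"name": "Ada Example", "email": "ada@example.com", "age": 34, "purchase_total": 120.5}
safe = anonymize_record(raw)
print(safe)
```

Pseudonymized data can still be linked back to a person by anyone who holds the key, so it may still count as personal data under laws such as GDPR and should be protected accordingly.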

Monitor and Audit AI Systems

It is also important to monitor and audit AI systems regularly in order to assess and reinforce ethical standards. Organizations should:

- Automated Monitoring: Deploy automated monitoring to detect anomalies and other risks that may arise in AI systems.

- Regular Audits: Schedule regular audits to determine whether an AI system is functioning as intended and whether it could expose the company to ethical issues.

- Incident Reporting: Establish a procedure for reporting and managing ethical incidents involving AI systems.
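As one simple illustration of automated monitoring, the sketch below compares the share of positive predictions in a recent batch against a baseline and raises an alert when the two drift apart. The `Alert` class, the function name, and the 0.10 tolerance are assumptions chosen for the example; production monitoring would usually track several metrics and feed alerts into the incident reporting procedure.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Alert:
    """Hypothetical alert record that could be passed to an incident process."""
    metric: str
    baseline: float
    observed: float


def check_prediction_rate(
    baseline_rate: float, recent_preds: List[int], tolerance: float = 0.10
) -> Optional[Alert]:
    """Return an Alert if the recent positive-prediction rate drifts from the baseline."""
    if not recent_preds:
        return None  # nothing to check yet
    observed = sum(recent_preds) / len(recent_preds)
    if abs(observed - baseline_rate) > tolerance:
        return Alert("positive_prediction_rate", baseline_rate, observed)
    return None


# Baseline measured at deployment (hypothetical), followed by a recent batch of predictions.
alert = check_prediction_rate(0.30, [1, 1, 1, 0, 1, 0, 1, 1, 0, 1])
if alert is not None:
    print(f"{alert.metric}: baseline {alert.baseline:.2f}, observed {alert.observed:.2f}")
```

The same check can be run per demographic group to watch for fairness drift after deployment.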

Engage with Stakeholders

AI governance should involve input from various stakeholders, including employees, customers, regulators, and civil society organizations. Engaging with stakeholders can help organizations identify ethical concerns and build trust. Strategies for stakeholder engagement include:

- Public Consultations: Conducting public consultations to gather feedback on AI policies.

- Ethics Advisory Boards: Establishing ethics advisory boards to provide guidance on AI governance.

- User Feedback: Collecting and acting on user feedback to improve AI systems.

Case Studies of Successful AI Governance

Many organizations have successfully implemented AI governance frameworks. For example:

- Microsoft: Microsoft has set up the AI, Ethics, and Effects in Engineering and Research (Aether) Committee, which advises on AI governance and ethical approaches. The committee brings together members from different fields, such as engineering, legal, and policy, to review AI projects and tackle ethical issues. Microsoft has also created internal policies that AI teams can use to build ethical AI [5], including the Responsible AI Standard and the Fairness Checklist.

- IBM: IBM has an AI Ethics Board that reviews AI proposals to check their compliance with IBM’s principles of responsible AI. The board addresses the company’s main areas of concern: fairness, transparency, accountability, and privacy. IBM has also introduced principles for trustworthy AI that call for explainability, privacy, and freedom from bias. To put these principles into practice, IBM developed tools such as AI Fairness 360 and AI Explainability 360, which help organizations identify bias in AI systems and make models easier to understand [7].

- Google: Google has published a set of AI Principles to follow when designing and deploying AI technology. These principles include avoiding the creation of biased AI systems, ensuring that systems are beneficial to society, and being accountable for them. Google has also set up internal controls, ORMA and ATC, for high-risk AI considerations, including the Advanced Technology Review Council. Moreover, the What-If Tool has been used to examine how a model performs for a particular demographic group and where possible biases lie [6]. Google’s AI principles and governance show the value of combining innovation with responsibility in the approach to AI.

The Role of Regulation in AI Governance

Ethical AI governance also depends on the efforts of governments and regulatory authorities. New regulation such as the EU AI Act seeks to set legal parameters for AI oversight and to ensure that firms developing and deploying AI follow proper procedures.

Organizations should therefore monitor how the regulation of AI is changing and ensure that their AI governance frameworks remain compliant [7].

Conclusion

AI governance is vital for achieving greater accountability in the development and deployment of AI technologies. Organizations can scale ethical AI governance by applying the practical strategies described here: setting up a governance framework, fostering an ethical AI culture, conducting ethical assessments and audits, ensuring transparency, mitigating bias, strengthening privacy and security, and engaging stakeholders. As AI advances and poses new risks to businesses, governance frameworks must be adjusted to meet emerging complexities. Ethical AI governance is not only a moral responsibility but also a business need for any organization that wants to build trust with customers and scale innovation responsibly.

References

- Walz, A., & Firth-Butterfield, K. (2019). Implementing ethics into artificial intelligence: A contribution, from a legal perspective, to the development of an AI governance regime. Duke L. & Tech. Rev., 18, 176.

- Khanna, S., Khanna, I., Srivastava, S., & Pandey, V. (2021). AI Governance Framework for Oncology: Ethical, Legal, and Practical Considerations. Quarterly Journal of Computational Technologies for Healthcare, 6(8), 1-26.

- de Almeida, P. G. R., dos Santos, C. D., & Farias, J. S. (2021). Artificial intelligence regulation: a framework for governance. Ethics and Information Technology, 23(3), 505-525.

- Auld, G., Casovan, A., Clarke, A., & Faveri, B. (2022). Governing AI through ethical standards: Learning from the experiences of other private governance initiatives. Journal of European Public Policy, 29(11), 1822-1844.

- Daly, A., Hagendorff, T., Hui, L., Mann, M., Marda, V., Wagner, B., & Wei Wang, W. (2022). AI, Governance and Ethics: Global Perspectives.

- Lan, T. T., & Van Huy, H. (2021). Challenges and Solutions in AI Governance for Healthcare: A Global Perspective on Regulatory and Ethical Issues. International Journal of Applied Health Care Analytics, 6(12), 1-8.

- Xue, L., & Pang, Z. (2022). Ethical governance of artificial intelligence: An integrated analytical framework. Journal of Digital Economy, 1(1), 44-52.