In today’s fast-paced world, data is being generated at unprecedented volume and velocity. Large language models (LLMs) are one way to make this data useful: they are artificial intelligence (AI) models trained on large amounts of text to understand and generate text.

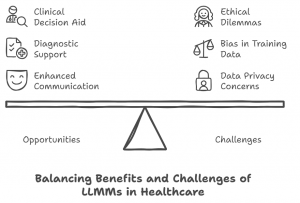

An LLM takes the user's input (the prompt) and generates a response based on patterns it learned from its training data. In healthcare, LLMs, known as medical large language models (LLMMs), have the potential to enhance communication between doctors and patients, support medical diagnosis and medical question answering, and provide clinical decision support.

They can also assist during surgery in real time, analyze images such as X-rays and MRIs to help radiologists identify disease quickly and accurately, and support preventive health care and disease prediction.

LLMs can help detect medical errors faster and more accurately. However, if a doctor relies on an LLM’s recommendation without fully understanding its limitations, it could lead to misdiagnosis.

The Role of Generative AI in Healthcare

Generative Pre-trained Transformer (GPT) technology has significantly impacted the healthcare industry. The current LLM boom is largely driven by the GPT wave and by leading models such as OpenAI's GPT series and Google's Gemini.

These large language models are becoming more specialized rather than general-purpose, so they can respond more accurately and precisely to healthcare information and communication. Their capabilities are notable: they can study and categorize vast amounts of patient-related data to produce useful results.

Healthcare has its own clinical language, with complex terminology and words whose meaning shifts with context. This language can be checked, and different tools can be used to remove ambiguity. However, understanding clinical text is not enough: in medicine, a model's output must also be monitored for false information and bias to prevent serious harm.

Large Language Models: Attributes

The enormous amount of data generated at high velocity must be put to meaningful use. To achieve this, models are trained on vast and diverse datasets, which enables them to understand multiple languages and respond with human-like text.

Models of this type are known as large language models, and they are recognized for their advanced capabilities. Some of these capabilities are discussed below:

Natural Language Understanding: LLMs are highly effective at interpreting the context of human language and responding to it accurately. In healthcare, these models are trained on medical reports to better support disease diagnosis.

| MODEL | APPLICATIONS |
|---|---|
| Generative Pre-trained Transformer (GPT) models by OpenAI | |
| Med-PaLM (Medical Pathways Language Model) by Google | Designed for medical question answering and specially trained on healthcare-related texts. |
| Transformer-based models in EHR systems | Identifying at-risk patients. |

Multi-Language Model

Multi-language models (MLMs) are a specialized subset of LLMs designed to process and interpret data in multiple languages. They are trained on diverse datasets, enabling them to tackle healthcare challenges across different linguistic and cultural contexts.

Applications:

X-Ray Analysis: MLMs process vast datasets to compare diagnostic imaging, providing accurate results and aiding radiologists.

Global Patient Care: By facilitating multilingual communication, MLMs improve patient outcomes in regions with diverse linguistic populations.

Medical Education: They help disseminate medical knowledge by translating resources into various languages, promoting inclusivity.

Scope in Healthcare

LLMs are proving effective in the healthcare industry. These models help accumulate data and apply data science methods to obtain insights that support critical decision-making for patients.

However, LLMs face challenges that should not be taken lightly, especially in healthcare, where a wrong decision can harm a patient.

Making Use of Bulk Patient Data:

Inpatient and outpatient data can be used to analyze disease patterns and predict how often, and over what period, patients return to the hospital. LLMs can then suggest treatment options and raise alerts about diseases or potential complications.
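As a concrete illustration of spotting repeat-visit patterns, the sketch below flags patients readmitted within a fixed window after discharge. It is a minimal sketch under stated assumptions, not part of any specific LLM system: the visit records, the `find_readmissions` helper, and the 30-day window are hypothetical choices for illustration (30 days is a common convention for readmission metrics).

```python
from collections import defaultdict
from datetime import date

# Hypothetical visit records: (patient_id, admission_date, discharge_date)
visits = [
    ("P001", date(2024, 1, 3), date(2024, 1, 7)),
    ("P001", date(2024, 1, 20), date(2024, 1, 22)),
    ("P002", date(2024, 2, 1), date(2024, 2, 4)),
    ("P002", date(2024, 5, 10), date(2024, 5, 12)),
    ("P003", date(2024, 3, 15), date(2024, 3, 18)),
]


def find_readmissions(visits, window_days=30):
    """Return (patient_id, days_since_discharge) for every admission that
    starts within window_days of the same patient's previous discharge."""
    by_patient = defaultdict(list)
    for patient_id, admitted, discharged in visits:
        by_patient[patient_id].append((admitted, discharged))

    flagged = []
    for patient_id, stays in by_patient.items():
        stays.sort()  # chronological order by admission date
        # Compare each stay with the one that follows it.
        for (_, prev_discharge), (next_admit, _) in zip(stays, stays[1:]):
            gap = (next_admit - prev_discharge).days
            if gap <= window_days:
                flagged.append((patient_id, gap))
    return flagged


print(find_readmissions(visits))  # [('P001', 13)]
```

Patterns like these are structured signals; in a system of the kind the article describes, an LLM would sit on top of them to explain the alert or suggest next steps, with a clinician reviewing the result.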

Communication With Patients:

Many patients who notice symptoms now turn directly to AI. The model answers their questions, suggests possible diagnoses, and asks follow-up questions about related symptoms that may point to a specific disease.

Literature Study:

The vast body of medical research and literature must be understood before valuable conclusions can be drawn. LLMs can help by identifying relevant studies, summarizing their findings, and spotting trends.

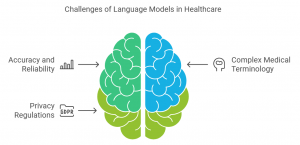

Challenges of LLMs in Healthcare

- Data Privacy and Security:

LLMs rely on extensive datasets, which can include sensitive patient information, to respond effectively. Patient data is protected under regulations such as the Health Insurance Portability and Accountability Act (HIPAA) in the USA and the General Data Protection Regulation (GDPR) in the European Union. Even when data is anonymized, combinations of the remaining attributes can be exploited to re-identify patients, leading to leaks and disclosures.
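One standard way to gauge this re-identification risk, used here as an illustration rather than taken from any particular LLM pipeline, is k-anonymity: every combination of quasi-identifiers (attributes such as partial ZIP code, birth year, and sex that are not names but can still single someone out) should be shared by at least k records. The records and helper names below are hypothetical; a result of k = 1 means at least one "anonymized" patient is unique on those attributes.

```python
from collections import Counter

# Hypothetical "anonymized" records: names removed, quasi-identifiers kept.
records = [
    {"zip3": "021", "birth_year": 1958, "sex": "F", "diagnosis": "diabetes"},
    {"zip3": "021", "birth_year": 1958, "sex": "F", "diagnosis": "asthma"},
    {"zip3": "021", "birth_year": 1971, "sex": "M", "diagnosis": "hypertension"},
    {"zip3": "946", "birth_year": 1990, "sex": "F", "diagnosis": "migraine"},
]
QUASI_IDENTIFIERS = ("zip3", "birth_year", "sex")


def group_key(record, quasi_identifiers):
    """The combination of quasi-identifier values for one record."""
    return tuple(record[q] for q in quasi_identifiers)


def k_anonymity(records, quasi_identifiers):
    """Size of the smallest group of records sharing identical quasi-identifiers."""
    groups = Counter(group_key(r, quasi_identifiers) for r in records)
    return min(groups.values())


def unique_records(records, quasi_identifiers):
    """Records that no other record matches on the quasi-identifiers."""
    groups = Counter(group_key(r, quasi_identifiers) for r in records)
    return [r for r in records if groups[group_key(r, quasi_identifiers)] == 1]


print(k_anonymity(records, QUASI_IDENTIFIERS))  # 1
for r in unique_records(records, QUASI_IDENTIFIERS):
    print("re-identification risk:", r)
```

Generalizing attributes (for example, birth decade instead of birth year) or suppressing rare records raises k, at some cost to how useful the data is for training.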

- Bias in LLMs

Another challenge LLMs face is bias, which can affect the safety and fairness of healthcare delivery. Here, bias means predictions or suggestions that are systematically skewed. It arises from the large datasets on which LLMs are trained: these datasets often come from the real world, including medical records, textbooks, and studies.

Although such sources are valuable stores of medical knowledge, they inevitably reflect the biases of the society or system that produced them. Biases in an LLM can be demographic, when the training data lacks diversity, or historical, when the data reflects past inequalities. Linguistic and cultural biases can also affect how the model interacts with patients and healthcare providers from different backgrounds.

For instance, if an LLM is trained primarily on data from urban hospitals, it might perform well when diagnosing and suggesting treatments for patients in urban settings but struggle with cases that are common in rural areas or in other demographic groups.
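A disparity like the urban/rural one above only becomes visible if accuracy is measured per subgroup rather than overall. The sketch below assumes a hypothetical set of evaluation results, each tagged with a care setting, and a hypothetical 10-percentage-point tolerance for the accuracy gap; it illustrates subgroup evaluation in general, not a specific auditing tool.

```python
from collections import defaultdict

# Hypothetical evaluation results: (care setting, model's diagnosis, confirmed diagnosis)
results = [
    ("urban", "pneumonia", "pneumonia"),
    ("urban", "influenza", "influenza"),
    ("urban", "asthma", "asthma"),
    ("urban", "bronchitis", "pneumonia"),
    ("rural", "influenza", "influenza"),
    ("rural", "bronchitis", "farmer's lung"),
    ("rural", "asthma", "farmer's lung"),
    ("rural", "pneumonia", "pneumonia"),
]


def accuracy_by_group(results):
    """Fraction of correct diagnoses within each subgroup."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, confirmed in results:
        total[group] += 1
        correct[group] += int(predicted == confirmed)
    return {group: correct[group] / total[group] for group in total}


def flag_disparity(accuracies, max_gap=0.1):
    """True if best and worst subgroup accuracies differ by more than max_gap."""
    return max(accuracies.values()) - min(accuracies.values()) > max_gap


acc = accuracy_by_group(results)
print(acc)                  # {'urban': 0.75, 'rural': 0.5}
print(flag_disparity(acc))  # True
```

Pooled over all eight cases, this model would score 62.5%, which hides the fact that rural patients fare much worse.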

- Ethical Concerns with LLMs in Healthcare:

When a language model gives a wrong recommendation in healthcare, determining who’s responsible is complicated. Should it be the healthcare provider who used the model, the developers who created it, or the institution that implemented it? Since these models are often complex “black boxes,” it’s challenging to understand or interpret how they arrive at their recommendations.

This lack of clear accountability raises ethical concerns because patients and families deserve to know who is ultimately responsible for their healthcare decisions. Another ethical issue is overreliance on LLMs. Although these models are highly advanced, errors can still occur. If healthcare providers begin to depend too much on model recommendations without proper oversight, they might overlook critical patient-specific details that a human expert would catch.

- False Output Generation

AI systems in general can generate false outputs, and it is hard to predict when they will. This becomes particularly risky in a clinical context, where the summaries and suggestions provided to patients can have critical implications for their health and well-being.

- Complex Medical Terminologies

Medical terminology is often complex and not commonly used in everyday language. For AI to assist effectively in healthcare, it must accurately understand the context and meaning of these terms to provide reliable results. Unlike general natural language, the language used in healthcare is highly specialized and requires precise comprehension to ensure accurate outcomes.
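To make the ambiguity problem concrete: the same abbreviation can mean entirely different conditions depending on context ("MS" can be multiple sclerosis or mitral stenosis). The toy, rule-based sketch below resolves such abbreviations from context cue words. It is only an illustration of why context matters, not how LLMs handle terminology internally; the lexicon, cue words, and `expand_abbreviations` function are hypothetical and far from complete.

```python
import re

# Hypothetical mini-lexicon: each ambiguous abbreviation maps to candidate
# expansions, each paired with context cue words that suggest it.
LEXICON = {
    "MS": [
        ("multiple sclerosis", {"mri", "lesions", "relapse", "neurology"}),
        ("mitral stenosis", {"murmur", "valve", "echo", "cardiology"}),
    ],
    "RA": [
        ("rheumatoid arthritis", {"joint", "joints", "stiffness", "methotrexate"}),
        ("right atrium", {"echo", "dilated", "atrial", "valve"}),
    ],
}
PATTERN = re.compile(r"\b(" + "|".join(LEXICON) + r")\b")


def expand_abbreviations(note):
    """Annotate ambiguous abbreviations with the expansion best supported by context."""
    words = set(re.findall(r"[a-z]+", note.lower()))

    def choose(match):
        abbr = match.group(0)
        # Score each expansion by how many of its cue words occur in the note.
        scored = sorted(
            ((len(cues & words), expansion) for expansion, cues in LEXICON[abbr]),
            reverse=True,
        )
        best_score, best = scored[0]
        if best_score == 0 or (len(scored) > 1 and scored[1][0] == best_score):
            return abbr  # no clear evidence: leave it for a clinician to resolve
        return f"{abbr} ({best})"

    return PATTERN.sub(choose, note)


print(expand_abbreviations("Known MS; new lesions on MRI, refer to neurology."))
print(expand_abbreviations("Loud murmur; echo suggests MS."))
print(expand_abbreviations("History of RA."))
```

When the context gives no clear signal, the sketch deliberately leaves the abbreviation unexpanded rather than guessing, which mirrors the article's point that ambiguous clinical language must be checked rather than assumed.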

- Checking AI Hallucinations

Just as disturbances in the brain can lead to hallucinations, AI can misinterpret commands and produce output that sounds plausible but is fabricated, nonsensical, or irrelevant. Proper monitoring and training are essential, as inaccuracies in healthcare outputs can have serious consequences.

To mitigate this, AI models are trained on domain-specific knowledge to improve their accuracy. In addition, methods now exist to estimate the likelihood of hallucinations in model outputs, enabling comparisons of accuracy and performance across different systems.

- Blindly Trusting AI

Relying on AI in healthcare without proper verification is a significant risk, especially when trusting critical results. This over-reliance can also highlight a lack of diligence among field experts, who may use the time saved by AI systems inefficiently. Instead of entirely depending on AI, this time could be better utilized to cross-check outputs, address potential errors, or engage in professional discussions to ensure the accuracy and reliability of results.

Effective Mitigation Strategies for LLMs:

- Comparing Outputs Across Different Systems:

Comparing outputs from multiple models can help reduce the likelihood of false results. Additionally, tuning these models with high-quality, up-to-date medical datasets is essential for improving their accuracy.

- Training Models on Medical Terminologies:

AI models should be trained on domain-specific datasets containing complex medical terminologies. This will enhance their understanding of specialized clinical language and ensure more reliable outcomes.

- Double-check:

Relying solely on AI without professional opinions can compromise patient safety. Therefore, in healthcare, AI must be treated as an assistive tool rather than a decision-maker.
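The strategies above, comparing outputs across systems and keeping a professional in the loop, can be combined: take a majority vote over several models and escalate any disagreement to a clinician. The sketch below is a minimal illustration under stated assumptions; the model names and answers are hypothetical, and the default requires unanimous agreement before an answer is treated as uncontested.

```python
from collections import Counter


def consensus(answers, min_agreement=1.0):
    """Majority vote over answers from several models.

    Returns (most common answer, fraction of models agreeing, needs_review).
    needs_review is True whenever agreement falls below min_agreement.
    """
    # Crude normalization: ignore case and surrounding whitespace only.
    normalized = [a.strip().lower() for a in answers.values()]
    top, count = Counter(normalized).most_common(1)[0]
    agreement = count / len(normalized)
    return top, agreement, agreement < min_agreement


# Hypothetical answers from three models to the same clinical question.
answers = {
    "model_a": "Iron-deficiency anemia",
    "model_b": "iron-deficiency anemia",
    "model_c": "Thalassemia trait",
}
answer, agreement, needs_review = consensus(answers)
print(answer, round(agreement, 2), needs_review)  # iron-deficiency anemia 0.67 True
```

Agreement is not the same as correctness, since every model can share the same error, so even a unanimous answer still goes to a clinician. Real answers would also need better normalization than case folding, for example mapping them to standard clinical codes before voting.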

- Mitigation Strategy for Hallucinations: Develop hallucination detection models to identify false outputs. These detectors should also report hallucination rates as percentages for different systems, so that the systems' reliability can be compared.
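Detecting hallucinations in general is an open problem, so the sketch below shows only one narrow heuristic, chosen here for illustration: a numeric grounding check that flags any summary sentence containing a number (a dose, a lab value) that never appears in the source note, then reports the flagged share per system as a percentage. The note, the summaries, and the system names are hypothetical.

```python
import re

NUMBER = re.compile(r"\d+(?:\.\d+)?")


def unsupported_sentences(source, summary):
    """Flag summary sentences containing numbers absent from the source.

    Returns (flagged sentences, total number of sentences).
    """
    source_numbers = set(NUMBER.findall(source))
    # Split after sentence-ending punctuation followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", summary.strip()) if s]
    flagged = [s for s in sentences if set(NUMBER.findall(s)) - source_numbers]
    return flagged, len(sentences)


def hallucination_rates(source, summaries):
    """Percentage of flagged sentences per system, rounded to one decimal place."""
    rates = {}
    for system, summary in summaries.items():
        flagged, total = unsupported_sentences(source, summary)
        rates[system] = round(100 * len(flagged) / total, 1) if total else 0.0
    return rates


# Hypothetical source note and summaries from two systems.
note = "Metformin 500 mg twice daily. Fasting glucose 7.2 mmol/L."
summaries = {
    "system_a": "Takes metformin 500 mg twice daily. Fasting glucose was 7.2 mmol/L.",
    "system_b": "Takes metformin 1000 mg twice daily. Fasting glucose was 7.2 mmol/L.",
}
print(hallucination_rates(note, summaries))  # {'system_a': 0.0, 'system_b': 50.0}
```

This catches only invented numbers; it misses fabricated drug names or diagnoses and would wrongly flag harmless rewrites such as "7.20". Practical detectors rely on much richer comparisons between output and source, but the per-system percentage works the same way.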

- Holistic thinking in LLMs:

By integrating holistic thinking, LLMs would combine centralized and decentralized approaches when processing information, making their responses more accurate and professional. A holistic-thinking LLM would:

- Analyze the individual aspects of a case (decentralized thinking) – such as patient history, lab results, and symptom progression.

- Place these findings in a broader context to develop a more informed, accurate recommendation (centralized thinking).

- Chain-of-Thought (CoT) and In-Context Learning (ICL)

Techniques like Chain-of-Thought (CoT) prompting and In-Context Learning (ICL) look highly promising for further improving the reliability of large language models (LLMs) in healthcare. CoT prompting has the model work through its reasoning step by step, which can improve its performance on tasks such as detecting errors and identifying critical information in clinical notes.

ICL, by contrast, supplies the model with examples of correct and incorrect interpretations directly in the prompt, so the model can learn from those cases without any change to its training. When ICL is combined with CoT, LLMs can better handle complicated medical data, improving accuracy and transparency, both of which are essential in high-stakes environments like medicine.
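Both techniques live entirely in the prompt, so they can be shown without any model API. The sketch below builds a few-shot (ICL) prompt whose worked examples, one erroneous note and one correct note, each spell out step-by-step reasoning (CoT) before a verdict. The examples, wording, and `build_prompt` function are hypothetical; the resulting string would be sent to whichever LLM is in use.

```python
# Hypothetical worked examples for in-context learning: each pairs a clinical
# note with step-by-step reasoning (the chain of thought) and a verdict.
EXAMPLES = [
    {
        "note": "Documented penicillin allergy. Plan: start amoxicillin 500 mg.",
        "reasoning": "Amoxicillin is a penicillin-class antibiotic. The note documents "
                     "a penicillin allergy, so this plan contradicts the allergy record.",
        "verdict": "ERROR: amoxicillin prescribed despite penicillin allergy.",
    },
    {
        "note": "Type 2 diabetes. Plan: continue metformin 500 mg twice daily.",
        "reasoning": "Metformin is a standard therapy for type 2 diabetes, and the note "
                     "mentions no contraindication.",
        "verdict": "NO ERROR FOUND.",
    },
]


def build_prompt(new_note, examples=EXAMPLES):
    """Assemble a few-shot prompt whose examples model step-by-step reasoning."""
    parts = ["Review each clinical note for medical errors. "
             "Reason step by step before giving a verdict.\n"]
    for ex in examples:
        parts.append(
            f"Note: {ex['note']}\nReasoning: {ex['reasoning']}\nVerdict: {ex['verdict']}\n"
        )
    # End with an open "Reasoning:" cue so the model writes its own reasoning first.
    parts.append(f"Note: {new_note}\nReasoning:")
    return "\n".join(parts)


prompt = build_prompt("Patient on warfarin. Plan: add aspirin 325 mg daily.")
print(prompt)
```

Including both an erroneous and a correct example matters: with only error examples, a model may learn to report an error for every note.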

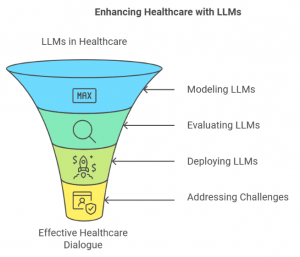

Conclusion:

If modeled, evaluated, and deployed correctly, LLMs can enhance the healthcare system and improve dialog summarization between healthcare providers and patients. Continued research that addresses challenges such as biased results, privacy, and ethical concerns, along with more specialized, domain-specific models, can make LLMs powerful tools for faster, more accurate diagnosis and personalized treatment.

Improving large language models (LLMs) for healthcare use involves guiding them to think and learn more like humans. With thoughtful integration, collaboration between developers, healthcare providers, and policymakers, and a commitment to patient safety, LLMs can truly enhance the future of medical innovation while minimizing risks.

Frequently Asked Questions

Can LLMs be trusted to make serious healthcare decisions?

LLMs should never replace human expertise. They work best as supportive tools that help healthcare practitioners by reducing their workload and offering insights, while humans maintain oversight.

What are Large Language Models (LLMs), and how are they used in healthcare?

LLMs are advanced AI systems trained on massive datasets to understand and generate human-like text. In healthcare, they are used for tasks like assisting in diagnoses, interpreting clinical notes, educating patients, and supporting decision-making in treatment planning.

How do Large Language models work?

LLMs are built on neural networks, typically using the transformer architecture. They are trained on vast amounts of text sourced from books, articles, websites, and other digital content, learning patterns, grammar, facts, and even some reasoning skills by repeatedly predicting the next token (a word or word piece). This is what allows them to generate human-like responses.
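The core idea of learning patterns from text and then predicting what comes next can be shown with a toy bigram model, which simply counts which word follows which. This is only an analogy: real LLMs use transformer neural networks over subword tokens and long contexts, not word-pair counts. The tiny corpus and function names below are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical tiny "training corpus"; "." is treated as a word.
corpus = ("the patient reports chest pain . the patient reports shortness of breath . "
          "the doctor orders an ecg .")


def train_bigrams(text):
    """Count how often each word is followed by each other word."""
    tokens = text.split()
    counts = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        counts[current][nxt] += 1
    return counts


def generate(counts, start, max_words=6):
    """Greedily extend the text with the most frequent next word."""
    words = [start]
    for _ in range(max_words - 1):
        followers = counts.get(words[-1])
        if not followers:
            break  # the word was never followed by anything in training
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


model = train_bigrams(corpus)
print(generate(model, "the"))  # the patient reports chest pain .
```

After "the", the model picks "patient" because it followed "the" twice in the corpus and "doctor" only once: the output reflects the statistics of the training text, which is also why biased or narrow training data produces biased or narrow output.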

Syed Mansoor