If you’re eyeing a career in machine learning and are especially interested in deep learning and neural networks (NNs), you should be ready for a rigorous interview process. If you need to sharpen your interview skills in machine learning algorithms, ensemble models, and other aspects of deep learning, these frequently asked interview questions and answers could be just what you need.

What is deep learning and how’s it different from other machine learning algorithms?

Deep learning is a subset of machine learning that uses a layered structure of algorithms known as an artificial neural network (NN), whose design is inspired by the biological neural networks of the human brain. A deep learning model can therefore learn from data and make decisions largely on its own, loosely similar to what humans do.

In contrast, other machine learning algorithms also parse data, learn from it, and make informed decisions based on what they have learned, but they typically need more guidance. An engineer often has to hand-craft the input features, and if the algorithm keeps giving erroneous predictions, the engineer has to intervene and make the necessary adjustments. A deep learning model learns useful features from the raw data itself and adjusts its own weights during training by comparing its predictions against the known answers.

How many network layers will any deep neural network (NN) consist of?

Any neural network (NN) consists of at least three layers: an input layer, a hidden layer, and an output layer. The hidden layer, positioned between the input and output layers, is the most vital one because feature extraction occurs there, and its weights are adjusted during training to improve the network’s predictions. When a network has more than one hidden layer, and therefore more than three layers in total, it is considered a deep neural network.
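To make the layer structure concrete, here is a minimal sketch of a forward pass in plain Python. The layer sizes, the random weights, and the helper names (`dense`, `init_layer`, `forward`) are illustrative assumptions, not part of any particular library, and no training is performed.

```python
import math
import random

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """Apply one fully connected layer: activation(W x + b)."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def init_layer(n_in, n_out, rng):
    """Random weights and zero biases for a layer with n_in inputs and n_out units."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def forward(x, layers):
    """Pass x through each (weights, biases, activation) layer in turn."""
    for weights, biases, activation in layers:
        x = dense(x, weights, biases, activation)
    return x

rng = random.Random(0)

# Minimal network: 4 input features -> 1 hidden layer (3 units) -> 1 output unit.
shallow_net = [
    init_layer(4, 3, rng) + (relu,),
    init_layer(3, 1, rng) + (sigmoid,),
]

# "Deep" variant: the same idea with more than one hidden layer.
deep_net = [
    init_layer(4, 3, rng) + (relu,),
    init_layer(3, 3, rng) + (relu,),
    init_layer(3, 1, rng) + (sigmoid,),
]

sample = [0.5, -1.2, 3.0, 0.7]  # hypothetical input with 4 features
shallow_out = forward(sample, shallow_net)
deep_out = forward(sample, deep_net)
print(shallow_out, deep_out)
```

The input layer is simply the feature vector itself; each entry in the list is a hidden or output layer, so adding hidden layers only means adding entries.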

How can ensemble learning improve the performance of neural network (NN) models?

Since neural networks (NNs) have high variance, settling on a single final model for making predictions can be frustrating. An effective solution is to reduce the variance by training multiple models instead of a solitary one and combining the predictions from all of them. This is ensemble learning, which not only decreases the variance of predictions but can even deliver better predictions than a single model would, because averaging cancels out part of the individual models’ errors and lowers the chance of overfitting.

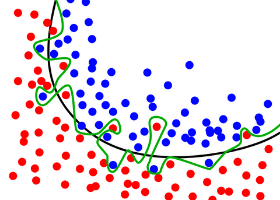
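The variance reduction from ensemble averaging can be illustrated with a small simulation. This is only a sketch under stated assumptions: a 1-nearest-neighbour regressor stands in for a high-variance model (it is not a neural network), the data is synthetic, and each ensemble member is fit on a bootstrap resample. All function names are hypothetical.

```python
import math
import random
import statistics

def make_data(n, rng):
    """Noisy samples of y = sin(x) on [0, 3] (synthetic data for illustration)."""
    xs = [rng.uniform(0.0, 3.0) for _ in range(n)]
    return [(x, math.sin(x) + rng.gauss(0.0, 0.5)) for x in xs]

def nearest_neighbour_predict(train, x):
    """High-variance base model: return the target of the closest training point."""
    return min(train, key=lambda point: abs(point[0] - x))[1]

def ensemble_predict(train, x, n_models, rng):
    """Average the predictions of n_models base models, each fit on a bootstrap resample."""
    predictions = []
    for _ in range(n_models):
        resample = [rng.choice(train) for _ in train]
        predictions.append(nearest_neighbour_predict(resample, x))
    return sum(predictions) / len(predictions)

rng = random.Random(42)
query = 1.0
single_preds, ensemble_preds = [], []

# Repeat the experiment on many fresh training sets to measure
# how much each approach's prediction at `query` fluctuates.
for _ in range(300):
    train = make_data(30, rng)
    single_preds.append(nearest_neighbour_predict(train, query))
    ensemble_preds.append(ensemble_predict(train, query, n_models=15, rng=rng))

single_variance = statistics.pvariance(single_preds)
ensemble_variance = statistics.pvariance(ensemble_preds)
print(f"single model variance: {single_variance:.3f}")
print(f"ensemble variance:     {ensemble_variance:.3f}")
```

With neural networks, the ensemble members would more commonly differ in their random initialization or training subset, but the combining step (averaging the predictions) is the same.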

What do you mean by overfitting and underfitting?

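Overfitting occurs when a machine learning algorithm or statistical model fits the training data so closely that it captures the noise in the data (random fluctuations and irrelevant or redundant detail) instead of the underlying pattern. This happens when the algorithm or model displays low bias but high variance, and it leads to poor predictions on new data.

Underfitting occurs when a machine learning algorithm or statistical model is too simple to fit the data adequately, so it performs poorly even on the training data. This happens when the algorithm or model displays high bias but low variance.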
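Both failure modes can be seen by comparing training and test error as model flexibility changes. The sketch below uses a k-nearest-neighbour regressor on synthetic data purely as an illustration; the choice of model, the values of `k`, and the function names are assumptions made for this example.

```python
import math
import random

def make_data(n, rng):
    """Noisy samples of y = sin(x) on [0, 6]; the noise is what an overfit model memorises."""
    data = []
    for _ in range(n):
        x = rng.uniform(0.0, 6.0)
        data.append((x, math.sin(x) + rng.gauss(0.0, 0.3)))
    return data

def knn_predict(train, x, k):
    """Average the targets of the k training points closest to x."""
    nearest = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    return sum(y for _, y in nearest) / k

def mse(train, data, k):
    """Mean squared error of a k-NN model fit on `train`, evaluated on `data`."""
    return sum((knn_predict(train, x, k) - y) ** 2 for x, y in data) / len(data)

rng = random.Random(0)
train = make_data(60, rng)
test = make_data(200, rng)

# k=1: very flexible (prone to overfitting).
# k=60: uses every training point, so it always predicts the global mean (underfitting).
# k=7: a middle ground.
results = {}
for k in (1, 7, 60):
    results[k] = (mse(train, train, k), mse(train, test, k))
    print(f"k={k:>2}  train MSE={results[k][0]:.3f}  test MSE={results[k][1]:.3f}")
```

With `k=1` the model reproduces every training point, noise included, so its training error is zero while its test error is noticeably worse: the signature of overfitting. With `k=60` the error is high on both sets, which is the signature of underfitting.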

What makes boosting a more stable algorithm than other ensemble algorithms?

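Boosting trains its models sequentially, and each iteration focuses on the errors made in earlier iterations until those errors are largely eliminated. Bagging, by contrast, trains its models independently of one another, so there’s no corrective loop. This corrective loop is what makes boosting a more stable algorithm than other ensemble algorithms.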
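The difference between the two training loops can be sketched with one-split regression trees ("stumps") as the weak learners. This is a simplified illustration on synthetic data: the boosting loop shown is residual fitting for squared error, one of several boosting variants, and the `learning_rate` value and all function names are assumptions for the example.

```python
import math
import random

def fit_stump(xs, ys):
    """Fit a one-split regression tree: pick the threshold that minimises squared error."""
    best = None
    for threshold in sorted(set(xs))[1:]:
        left = [y for x, y in zip(xs, ys) if x < threshold]
        right = [y for x, y in zip(xs, ys) if x >= threshold]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        error = (sum((y - left_mean) ** 2 for y in left)
                 + sum((y - right_mean) ** 2 for y in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x < threshold else right_mean

def mse(model, xs, ys):
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def boost(xs, ys, rounds, learning_rate=0.5):
    """Boosting: each new stump is fit to the errors left by the stumps before it."""
    stumps = []
    residuals = list(ys)
    for _ in range(rounds):
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        # Corrective loop: the next round only sees what is still unexplained.
        residuals = [r - learning_rate * stump(x) for x, r in zip(xs, residuals)]
    return lambda x: sum(learning_rate * s(x) for s in stumps)

def bag(xs, ys, rounds, rng):
    """Bagging: stumps are fit independently on bootstrap resamples, then averaged."""
    stumps = []
    for _ in range(rounds):
        idx = [rng.randrange(len(xs)) for _ in xs]
        stumps.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return lambda x: sum(s(x) for s in stumps) / len(stumps)

rng = random.Random(1)
xs = [rng.uniform(0.0, 6.0) for _ in range(80)]
ys = [math.sin(x) + rng.gauss(0.0, 0.1) for x in xs]

single_mse = mse(fit_stump(xs, ys), xs, ys)
boosted_mse = mse(boost(xs, ys, rounds=20), xs, ys)
bagged_mse = mse(bag(xs, ys, rounds=20, rng=rng), xs, ys)
print(f"single stump: {single_mse:.3f}")
print(f"boosted:      {boosted_mse:.3f}")
print(f"bagged:       {bagged_mse:.3f}")
```

Because every boosted stump is fit to the remaining residuals, the combined model keeps correcting its earlier mistakes. The bagged stumps never see each other’s errors, so averaging them mainly smooths a single stump’s prediction.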

Conclusion

Make the most of these interview questions to give your deep learning career the boost it needs.